In this post we look at some recent advances in User Interface (UI) technology. One such development was revealed at a recent Microsoft media event, where the company announced the Microsoft HoloLens, a computing platform that aims to connect the digital and the physical world seamlessly, much like the experience depicted in certain movies in the past.

It is interesting to note that the design of the HoloLens device looks so similar to something we have seen before.

OMG. Marty McFly used Microsoft’s #HoloLens in Back to the Future II, which takes place in… 2015! #BTTF #Win10 pic.twitter.com/7udvvs5cp8— Roderick Vonhogen (@FatherRoderick) January 21, 2015

Even the vision of holographic computing, with users interacting with such interfaces, isn’t a new one. The 2002 movie “The First $20 Million Is Always the Hardest” was possibly the first time we saw what such a futuristic technology might look like.

How did we get here? A brief discussion on UIs…

User interfaces have always been an important aspect of computers. In their early days, computers had a monochromatic screen (or at most a two-color screen). A user would type commands at a prompt on the screen and the computer would execute them. Since the commands were entered as a single line or a series of lines, this interface was called the Command-Line Interface (CLI).

Such an interface was not particularly intuitive, as you had to know the list of commands that would fulfill a certain task. That said, a certain group of individuals, i.e. geeks and some computer programmers like me, prefer such an interface owing to its clean and distraction-free nature. However, owing to the learning curve of CLIs, researchers at the Stanford Research Institute and the Xerox PARC research center invented a new user interface called the Graphical User Interface (GUI). There were a few variations of the GUI, for example the point-and-click type, also known as the WIMP (windows, icons, menus, pointer) UI, created at the Xerox PARC research center and made popular by Apple through its Macintosh operating systems.

It was also adopted by Microsoft in its Windows operating systems.

Some early versions even included a text-based user interface, with programs whose menus could be navigated using a keyboard instead of a mouse.

Eventually new avenues were created for UI research. We moved from textual interfaces to WIMP interfaces and then to the World Wide Web, where objects became entities accessible through a Uniform Resource Identifier (URI). Such an entity could also have semantics associated with it (as envisioned by the Semantic Web, sometimes called Web 3.0). However, with the advent of smartphones we saw a completely different class of user interfaces: touch-based user interfaces and their more evolved cousins, multi-touch systems, which allowed gesture-based interactions.

This was the first time in mainstream computing that people were able to interact directly with an object on their device using their hands instead of a separate input device. The experience was immersive, yet these objects had not entered the real world. We were on the precipice of a revolution in computing.

This revolution was the mainstream launch of wearable technology, virtual and augmented reality, and optical head-mounted displays, with the creation of devices like the Oculus Rift, Google Glass and EyeTap, among others. These devices allowed voice input and created a virtual or an augmented reality world for their users. Microsoft too was working on gesture-based interactions with the Kinect device and on research in the Natural User Interface (NUI) field. A couple of interesting works from this revolution that are worth a look are listed below.

This talk by John Underkoffler demos a UI that we saw in the movie Minority Report. He talks about the spatial way in which humans interact with their world, and how computers might help us better if we could interact with them in the same way.

Here Pranav Mistry, currently the Head of the Think Tank Team and Director of Research of Samsung Research America, speaks of SixthSense, a new paradigm in computing that allows interaction between the real world and the digital world. All these works were knocking on the door of the kind of computer we saw in the 2002 movie mentioned earlier: a real-life holographic computer. Enter Microsoft HoloLens!

What is Microsoft HoloLens?

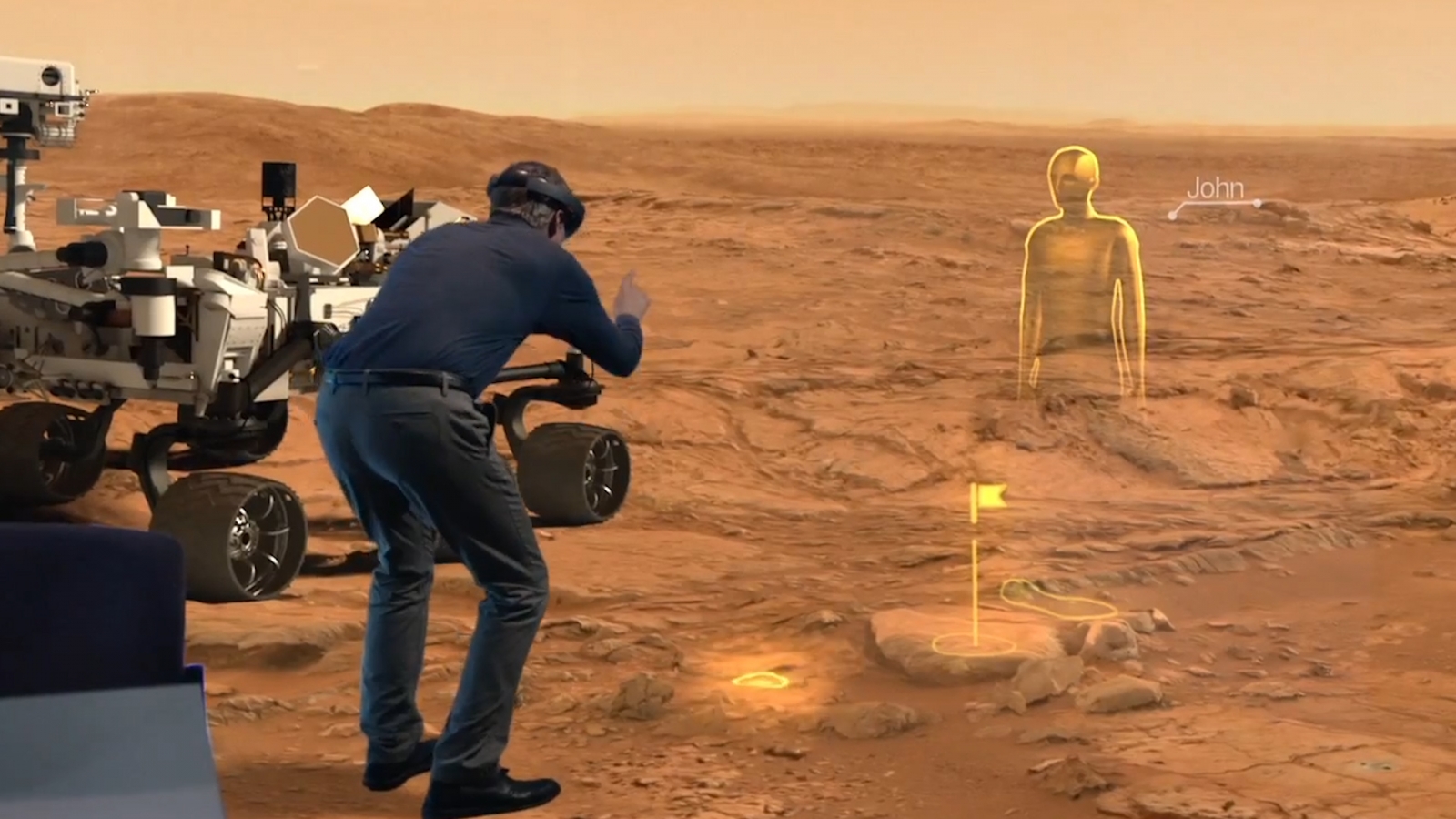

Microsoft HoloLens is an augmented reality computing platform. As per the review from Forbes.com, this device takes a step beyond current work by adding virtual holograms to the world around its user, rather than putting the user in a completely virtual environment. The device has launched a new platform for software development, i.e. holographic apps. It has also created scope for hardware research and development, as it requires new components like the Holographic Processing Unit (HPU). Visualizing and sharing ideas, and interacting with the real world, can now be done as envisioned in the TED talk by Pranav Mistry. The more natural way of interacting with digital content envisioned in the works above is now a reality. The device tracks its user’s movements in an environment. It detects what a person is looking at and transforms the visual field by overlaying 3D objects on top of it.
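To give a rough feel for what “overlaying 3D objects” on a visual field involves, here is a minimal sketch of a pinhole projection, which maps a 3D point in front of the viewer to a 2D position in the display. This is only an illustration under simple assumptions, not a description of how HoloLens works internally; the function name `project_point`, the focal length and the display size are all hypothetical.

```python
def project_point(point, focal_px, width, height):
    """Project a 3D point (x, y, z) in viewer coordinates to a pixel (u, v).

    Assumed convention (hypothetical): the viewer looks down +z,
    x points right and y points up. Returns None when the point is
    behind the viewer or falls outside the display.
    """
    x, y, z = point
    if z <= 0:
        return None  # behind the viewer, nothing to draw

    # Perspective divide: objects farther away land closer to the center.
    u = width / 2 + focal_px * x / z
    v = height / 2 - focal_px * y / z  # pixel rows grow downward

    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None  # outside the visible field


# A virtual object 2 m ahead and 1 m to the right, on a 640x480 display.
print(project_point((1.0, 0.0, 2.0), focal_px=500, width=640, height=480))
```

A real device would also need to re-estimate the viewer’s head pose continuously and re-project every frame, which is where the tracking mentioned above comes in.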

What kind of applications can we expect to be developed for HoloLens?

When the touch UI became a reality, developers had to change the way they worked on software. Direct object interactions, as described above, had to be programmed into their applications. Apps for HoloLens would similarly need to handle use cases involving voice commands and gesture recognition. Some common ideas that come to mind, with their corresponding research areas, include:

- Looking up a grocery list when you enter the grocery store (context-aware computing)

- Recording important events automatically (context aware computing)

- Recognizing people at a party (social media and privacy)

- Taking down notes, writing emails using voice commands (natural language understanding)

- Searching for “stuff” around us (NLP, data analytics, semantic web, context-aware computing)

- Playing 3D games (animation and graphics)

- Making sure your battery doesn’t run out (systems, hardware)

- Virtual work environments (systems)

- Teaching virtual classrooms (systems)
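As an illustration of the first idea in the list, a context-aware app could show a note when the wearer comes within some distance of a saved place. The following is a minimal sketch under stated assumptions, not an actual HoloLens API: the `Geofence` class, the `notes_for_location` helper, the coordinates and the 75 m radius are all hypothetical, and a real app would obtain its location from the platform’s own sensors.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


@dataclass
class Geofence:
    """A saved place with a note to show when the user is nearby (hypothetical)."""
    name: str
    lat: float
    lon: float
    radius_m: float
    note: str

    def contains(self, lat, lon):
        return haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m


def notes_for_location(fences, lat, lon):
    """Return the notes of every geofence that contains the given position."""
    return [f.note for f in fences if f.contains(lat, lon)]


# Made-up coordinates for a grocery store and two user positions.
fences = [Geofence("grocery store", 39.2550, -76.7110, 75.0,
                   "Grocery list: milk, eggs, bread")]
print(notes_for_location(fences, 39.2551, -76.7111))  # a few metres away
print(notes_for_location(fences, 39.3000, -76.7110))  # several km away
```

Location is only one kind of context; the same trigger pattern could be driven by time of day, recognized objects or nearby people, which is where the privacy questions discussed below come in.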

Why or how could it fail?

Are there any obvious pitfalls that we are not thinking about? We can rest assured that researchers are already looking at ways this venture could fail. For its own good, Microsoft surely has a list of the ways this might go wrong and is working on fixing any flaws it has found. However, as researchers in the mobile field with a bit of experience with the Google Glass, we can try to list some of the possible pitfalls of an AR/VR device. The HoloLens, being an untethered Augmented Virtual Reality (AVR) device, could possibly suffer from some of these pitfalls too. The reader should understand that we are not claiming any of the following to be scientifically proven; these are merely anecdotal observations from our own use.

- The first thing that worried us while using the Google Glass was that it would sometimes cause us headaches after a couple of hours of use. We have not studied the effects of the device on anyone else, so this is an observation from personal experience. One concern, therefore, is the health impact of prolonged use of an AVR device.

- The second thing we noticed with the Google Glass was how fast the device heated up. We know from experience that computers get hot, for example when we play a game or run a lot of complex computations. An AVR device that is used for playing games will most probably get hot too. The Google Glass certainly did after recording a video. Here we are concerned about heat dissipation and its health impact on the user.

- The third observation we made was that the Google Glass showed significant sluggishness on computation-heavy tasks. Will the HoloLens device be able to keep up with all the computations needed for, say, playing a 3D game?

- The fourth concern is battery capacity. The HoloLens is advertised as a device with no wires, cords or tethers. Anyone who has ever used a smartphone knows the problem of the battery running out within a day or even half a day. Will the HoloLens be able to hold a charge for long, or will it require constant charging?

- The fifth concern is privacy. The Google Glass has faced quite a few privacy concerns because it can readily take pictures using a simple voice command or even a non-verbal command like a ‘wink’. We have worked on this issue as part of our research project FaceBlock. Will the HoloLens raise similar concerns, given that it too has front-facing cameras that capture the user’s environment in order to project an augmented virtual world to the user?

The above lists of possible issues and probable application areas are not exhaustive in any way. There will be numerous other scenarios and ways we can work on this new computing platform, and there will probably be a multitude of issues with such a new and revolutionary platform. However, the hybrid of augmented and virtual reality has only just started taking small steps. With the invention of devices like the Microsoft HoloLens, Google Glass, Oculus Rift and EyeTap, we can look forward to an exciting period in the future of computing for Augmented Virtual Reality.