Picture Perfect

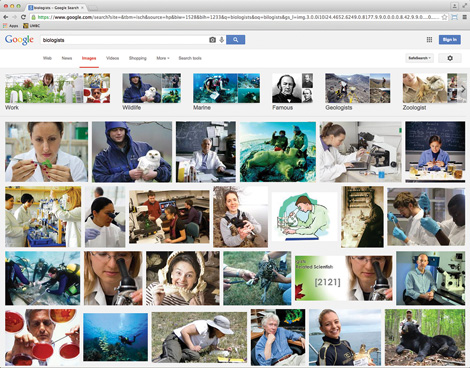

Quick: Women make up what proportion of biologists in the United States? According to federal statistics, the answer is almost exactly half: 50.1 percent. If you search for “biologist” on Google Images, however, only 35 percent of the top results are images of women.

The Google algorithm serves up similar distortions for computer programmers (23 percent in real life, but only 17 percent of top Google images) and chief executive officers (27 percent in real life, but only 11 percent of top Google results, one of which is “CEO Barbie”).

Those were among the findings of a much-discussed study released this spring by Cynthia Matuszek, assistant professor of computer science and electrical engineering at UMBC, and two colleagues from the University of Washington, where Matuszek recently completed her doctorate. Matuszek and her colleagues wanted to explore the ways in which algorithm-generated image-search results both reflect and reinforce popular stereotypes about how men and women should spend their lives.

The germ of the project, Matuszek says, came when she attended a conference presentation by a scholar working on robot caregivers for children. “He was referring to all of the grad students in his lab with male pronouns, even when he had pictures of his female grad students on the screen,” Matuszek recalls. “And when he introduced the concept of ‘caregiver,’ he used a clip-art image of a stereotypically motherly looking, smiling woman in a pink skirt suit. And I just thought to myself, ‘Really?’”

As she sat there, Matuszek started to wonder if image databases act as vectors for lazy gender stereotypes. With two University of Washington colleagues – Matthew Kay, a doctoral candidate in computer science, and Sean A. Munson, an assistant professor of human-centered design and engineering – she decided to put Google Images to some empirical tests.

The three scholars examined 45 occupations for which reliable data exist from the U.S. Bureau of Labor Statistics. They found that, on average, men were overrepresented in Google image-search results relative to their actual prevalence in the work force. In highly female-dominated professions, however, the image results tended to skew even further toward women than reality does. (Ninety-five percent of image results for “librarian” are women, for example, versus 87 percent of the actual librarian work force.)
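For readers who want to see the size of these gaps side by side, the comparison can be sketched in a few lines of Python. This is only an illustration built from the percentages quoted in this article, not the researchers' own analysis; the names `SHARES` and `representation_gap` are invented for the example.

```python
# Share of women, in percent: (actual work force, top Google image results).
# Figures are the ones quoted in this article; all names here are illustrative.
SHARES = {
    "biologist": (50.1, 35.0),
    "computer programmer": (23.0, 17.0),
    "chief executive officer": (27.0, 11.0),
    "librarian": (87.0, 95.0),
}


def representation_gap(actual, shown):
    """Percentage-point gap; negative means fewer women in the images than in reality."""
    return round(shown - actual, 1)


gaps = {job: representation_gap(actual, shown)
        for job, (actual, shown) in SHARES.items()}

# List occupations from most underrepresented to most overrepresented.
for job, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{job}: {gap:+.1f} percentage points")
```

A negative number means women appear less often in the images than in the actual work force; a positive number, as for librarians, means the images exaggerate an existing female majority.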

Matuszek’s team also examined gender differences in the degree of competence and professionalism portrayed in the Google search results. To explore that question, the team recruited a few dozen participants from Amazon’s Mechanical Turk system and asked them to rate workers in the images along eight different scales: “attractive,” “trustworthy,” and so on. It turned out that the workers in the images were rated as appearing more professional, competent, and trustworthy if they matched the profession’s gender stereotype. Female nurses, for example, were rated as appearing more competent than male nurses; the opposite was true for construction workers.

In a final study, Matuszek and her colleagues examined whether image-search results could actually alter people’s perceptions of an occupation’s real-world gender balance. To test that question, they asked participants to estimate a profession’s gender balance, rate the profession’s prestige, and say whether they believed the profession was growing – all without viewing Google’s image-search results. Then, two weeks later, the same participants were shown Google’s search results and asked the same questions.

They found a small but significant effect: The image results did indeed sway the participants’ estimates. Here’s one example: In the real world, 55 percent of technical writers are women. But in Google’s image-search results for “technical writer,” only 35 percent of the images are of women. One of Matuszek’s participants initially estimated that 40 percent of technical writers are women – but then, two weeks later, after viewing the image-search results, estimated the proportion at 35 percent, in line with what Google was showing. In this case, Google had led the participant deeper into error.

Does any of this matter? And, if so, what should be done about it? “We don’t make any recommendations in the paper,” Matuszek says. “We don’t necessarily say anything should change. We just want people who are designing these algorithms to be aware of the choices they’re making, and to know what kinds of effects these things can have.”

— David Glenn